Nginx can act as a load balancer in front of multiple back-end web servers by using its proxy functions. This guide covers the basics of configuring the proxy server to pass requests to the other web servers. It assumes you have already completed the initial install of Nginx. If you do not already have an install, please see Nginx Compile From Source On CentOS.

Create a new configuration file (or add to an existing one):

    nano /etc/nginx/load-balancer.conf

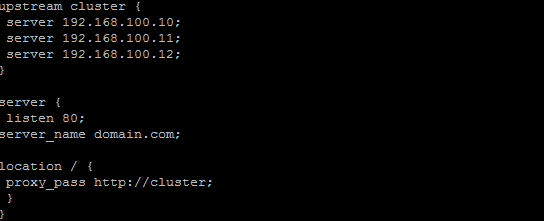

Insert the following:

    upstream cluster {
        server 192.168.100.10;
        server 192.168.100.11;
        server 192.168.100.12;
    }

    server {
        listen 80;
        server_name domain.com;

        location / {
            proxy_pass http://cluster;
        }
    }

Save the file. Substitute the 192.168.100.xx IP addresses with the addresses of the web servers you want to send requests to. Once you have completed the load balancing configuration, restart Nginx to load the new configuration:

    service nginx restart
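One detail the steps above leave implicit: `upstream` and `server` blocks are only valid inside the `http` context, so a standalone file such as /etc/nginx/load-balancer.conf has to be pulled in from the main configuration. A minimal sketch, assuming the main file is /etc/nginx/nginx.conf and that it does not already include this path (both are assumptions about your install):

```nginx
# /etc/nginx/nginx.conf (assumed location of the main configuration)
http {
    # Existing http-level settings stay as they are.

    # Load the upstream and server blocks defined in the load balancer file.
    include /etc/nginx/load-balancer.conf;
}
```

Running `nginx -t` before the restart checks the configuration for syntax errors without touching the running server.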

Nginx Load Balancing Methods

There are several load balancing methods you can use; each directs traffic differently.

Round Robin

Round-robin is the default and is used when no other method is specified. Each new request is sent to the next server in turn.

IP Hash

    upstream cluster {
        ip_hash;
        server 192.168.100.10;
        server 192.168.100.11;
        server 192.168.100.12;
    }

ip_hash sends all requests from the same client IP address to the same server, as long as that server is available. This is useful if you need sessions to persist on the same server for a given client.
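If one of the servers has to be taken out of rotation temporarily, the Nginx documentation recommends marking it with the `down` parameter rather than deleting its line, so that the existing mapping of client IPs to the remaining servers is preserved. A sketch, assuming 192.168.100.11 is the server under maintenance (an arbitrary choice for illustration):

```nginx
upstream cluster {
    ip_hash;
    server 192.168.100.10;
    # Marked as unavailable; the line is kept so the hashing of
    # client IP addresses to the other servers does not change.
    server 192.168.100.11 down;
    server 192.168.100.12;
}
```

Removing the `down` parameter and reloading Nginx puts the server back into rotation.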

Least Connections

    upstream cluster {
        least_conn;
        server 192.168.100.10;
        server 192.168.100.11;
        server 192.168.100.12;
    }

least_conn sends each new request to the server with the fewest active connections. This is useful for keeping the load on each server roughly equal.

Weighted Load Balancing

    upstream cluster {
        server 192.168.100.10 weight=3;
        server 192.168.100.11 weight=1;
        server 192.168.100.12 weight=1;
    }

Specifying a weight distributes more requests to servers with a higher weight. In the above example, out of every 5 requests, 3 are sent to 192.168.100.10 and one is sent to each of the other 2 servers. This is useful when one server is more powerful than the others, or when you want to test something and control the portion of traffic going to a specific server.
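Weights can also be combined with the other methods; least_conn, for example, takes server weights into account when picking a server. A minimal sketch reusing the addresses above, with the weight value chosen purely for illustration:

```nginx
upstream cluster {
    least_conn;
    # Favored when connection counts are compared, because of its higher weight.
    server 192.168.100.10 weight=3;
    # No weight given, so these two use the default weight of 1.
    server 192.168.100.11;
    server 192.168.100.12;
}
```

How much extra traffic the heavier server should take depends on your hardware, so treat the value 3 as a starting point to adjust rather than a recommendation.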

It works very well for me.